A blood-red helicopter swooped low over a French village at the foot of Mont Blanc, dangling what looked like a miniature wrecking ball. Renaud Keriven feared that his enthusiasm for the project – for the chance to change how we see and study the world – had led him into a career-ending faux pas. “It was insane. Completely unauthorized,” he recalls. “But if we hadn’t been a little crazy, nothing would have happened.”

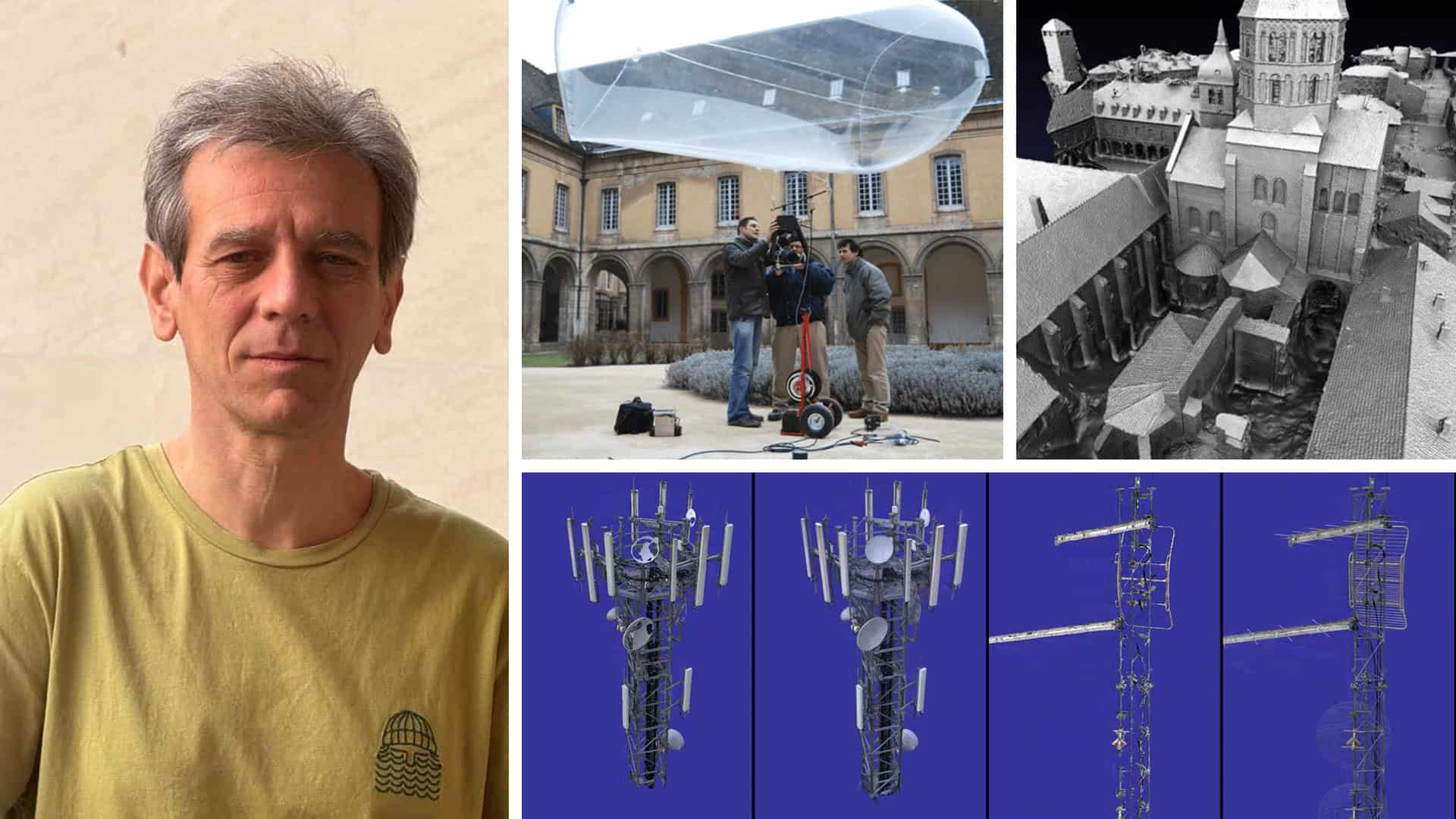

That “wrecking ball” was a 50-kilogram sphere stuffed with 25 cameras – an improvised aerial capture system designed to photograph the village from every angle. In just three minutes, Keriven and his fellow guerrilla researchers from École des Ponts ParisTech had captured what they needed. A full week of computation later, the result was a detailed 3D model of the entire village. “It felt fantastic,” says Keriven.

That was in the late 2000s, the swashbuckling beginnings of “reality capture.” Today, Keriven is a distinguished engineer at Bentley Systems, the infrastructure engineering software company, helping shape the future of digital infrastructure and infrastructure digital twins. But his mission remains unchanged: to teach machines how to make visual sense of the world – and reconstruct it in 3D. What started as a daring academic experiment is now transforming how we design, monitor, and maintain the built environment.

From telecom towers to railways, bridges to entire cities, digital twins of built assets depend on reality capture to stay precise and useful. In turn, reality capture relies on the technologies that Keriven has spent decades inventing, refining, and scaling, and that are now supercharged by the latest advances in machine learning and artificial intelligence (AI). Today, he is a leader in the brave new world of Gaussian splatting, a breakthrough technique that’s rapidly gaining traction across the 3D graphics world.

From Stereo Vision to Photogrammetry

Born in 1966 to a working-class family, Keriven was the first in his family to receive a formal education. A gift for mathematics won him entry to France’s most elite engineering schools. He chose École Polytechnique – often considered the pinnacle of French scientific education – before specializing at École des Ponts ParisTech in applied mathematics, pattern recognition, and the then-emerging field of machine vision.

In the early 1990s, a key goal of machine vision was to unlock space exploration. “If you want to send a rover to Mars, you can’t remote-control it. It’s too far away,” says Keriven. “It has to be autonomous, and for that it needs vision.”

The foundations of computer vision lie in centuries of mathematical thinking about space, geometry, and perception. Keriven and his peers turned that theory into practice, designing algorithms that enabled computers to extract depth, structure, and spatial relationships from images.

A critical breakthrough came in teaching machines to mimic depth perception – using two slightly offset images to triangulate the 3D location of a point in space. The hard part was getting computers to identify the same point across both images. “Finally, in the mid-90s, we were able to do that, quite slowly, in a machine,” Keriven recalls. “We’d feed photos into it, and it would output a rough 3D sketch of these points in space.”

It was the beginning of photogrammetry: the science – and art – of extracting 3D data from 2D photographs.

Into the Great Wide Open

Long before today’s data-rich digital twins of cities and infrastructure, two very different communities were trying to extract shape from images. In university labs, Keriven and his peers worked with fixed cameras, small objects, and tightly controlled conditions. Surveyors, by contrast, operated in the “real world,” capturing overlapping photographs from aircraft and manually matching points across images to generate elevation maps.

But while one group relied on structure and standards, the other thrived on experimentation. And as computing power grew, so did Keriven’s ambition. “Soon, we were able to create models not just from small objects in a lab, but from real life,” Keriven says. In other words, the computer-vision crowd was heading outside. “Surveyors were engineers; we were scientists… And then we met in the middle.”

For Keriven, by now head of the computer vision lab at École des Ponts, going outside meant a series of guerrilla photography expeditions. In those days, before drones and lightweight digital cameras, he bet big on a balloon. A helium-filled balloon 5 meters in diameter, to be precise, with a €50,000 camera tied underneath it. “We used it on the grounds of a very old monastery in France,” he recalls. Up it went on a string, to 150 meters. “We got images no one else had.” The result? A richly detailed 3D model of the monastery.

The helicopter captures followed soon after, including Aiguille du Midi – a towering peak overlooking Chamonix.

Making the Leap: Founding Acute3D

As digital cameras improved, computers became more powerful, and drones entered the picture, the potential of reality capture began to expand rapidly. By 2011, Keriven was a professor working in public research, dreaming of modeling all of France in 3D. But unable to get institutional support, and desperate to see his technology applied, he took matters into his own hands. With his student, Jean-Philippe Pons, he co-founded Acute3D that year in Sophia Antipolis (aka the French Silicon Valley) and Paris. “We were naive,” he says. “We did it using our own money, basically in a garage.”

Naive or not, their pioneering technology caught on quickly. Acute3D’s software, Smart3DCapture, turned sets of digital photographs into high-resolution 3D “reality meshes” – textured, engineering-ready surface models. It was scalable from single buildings to entire cities. Acute3D quickly found international users across construction, mining, telecoms, and even Formula One. “At the time, our clients didn’t do much with their models,” Keriven admits. “Mostly, they were just happy to have them – they looked so beautiful on their websites.”

In business, says Keriven, “you grow or you die.” And after growing the company to 15 staff, they reached a decision point: find venture capital funding to scale up or find a buyer. “We decided we’re not startup guys,” says Keriven. “We’re scientists.” They chose to sell to Bentley Systems because, as software-focused mathematicians, they wanted a partner who shared their technical DNA, understood their vision, and wanted to get this technology into as many hands as possible. “It was a perfect match for us,” Keriven says.

A Platform for Progress

When Bentley acquired Acute3D in 2015, Keriven and Pons continued to develop their technology. Their software evolved into ContextCapture, and later, iTwin Capture. It is now a cornerstone of Bentley’s iTwin platform for infrastructure digital twins.

But reality meshes were only the beginning. After joining Bentley, Keriven turned to machine learning, which was rapidly becoming the heart of computer vision research. Machine learning adds a new layer of intelligence to 3D infrastructure models – automatically detecting and labeling features like antennas, road signs, cracks, or trees. 3D models were already visual and measurable – but with machine learning, they become searchable, interpretable, and vastly more useful.

Machine learning can also track changes – flagging new construction, damage, or deterioration over time. “We’re working hard on change detection,” says Keriven. “To automatically spot what’s changed between two image acquisitions and update the digital twin.”

The usefulness of a 3D model also hinges on how easily it can be navigated – especially at city or network scale. Before joining Bentley, Acute3D developed a technique for efficiently streaming large 3D models – loading only the relevant parts, at just the right resolution, as users moved through them. When Keriven presented the idea to Bentley co-founder Ray Bentley, Ray immediately took it upon himself to integrate it into MicroStation, a foundational Bentley product, and did it within three weeks. “I had a friend at a rival company whose similar technology still hadn’t been adopted after five years!” Keriven says.

Later, when Keriven encountered Cesium’s new 3D Tiles, he immediately saw the potential – not just as a technically elegant solution, but as an open format that aligned with Bentley’s commitment to interoperability. Cesium, then an independent startup focused on high-performance 3D geospatial visualization, had created 3D Tiles to stream massive datasets smoothly in web browsers. Bentley made the switch, establishing 3D Tiles as a foundational element of the iTwin platform. In 2019, the Open Geospatial Consortium made 3D Tiles an official community standard, and in 2024, Bentley acquired Cesium.

Enter the Splats

Reality capture is evolving again – this time with Gaussian splatting, a breakthrough technique that’s rapidly gaining traction across the 3D graphics world. The technique bears the name of the German mathematician Carl Friedrich Gauss (1777–1855), the father of the bell curve probability distribution. Originally developed by graphics researchers to create seamless 3D scenes from a handful of photos, splats offer a new way to represent 3D data. Not point clouds, not meshes – but translucent ellipsoids that build on Gauss’s probabilistic functions and, layered together, reconstruct surfaces with striking realism.

Keriven had a front-row seat to the technique’s development. “The inventors of Gaussian splats, led by George Drettakis at INRIA, worked in the lab next door to ours, so we were always talking,” he says. “The success of their approach has been overwhelming.”

Splats are built from the same inputs as reality meshes: photos, drone scans, LiDAR. What makes them particularly promising for infrastructure is that they easily handle fine details such as telecom wires, antennas, scaffolding, and foliage, which traditional meshes struggle with.

Bentley has already released early tools to stream splats for small buildings, with city-scale streaming expected by the end of 2025.

Splatting is an open technique, but without a common format, interoperability can break down. That’s why Keriven, along with Cesium, is helping shape an official standard through the Khronos industry group – so splats can be published, streamed, and easily reused across platforms.

One Button to Map the World

Asked what’s next, Keriven doesn’t hesitate: automation. “I would really like reality capture to be completely automated, including the hardware,” he says. “So you just press a button, and a drone takes off and takes every picture. And at the end, with no effort, you have everything available in the cloud – the mesh, the splats, the machine learning labels.”

Beyond infrastructure, he imagines nothing less than a living 3D model of the world, updated by entire communities, like a crowdsourced, photorealistic Google Earth, built from the ground up. “I want everyone to be able to have a 3D model if they want one,” he says. “So many applications are stuck because you need an expert to create a high-quality 3D model. We have to kill that.”

For Keriven, the ultimate goal isn’t just better 3D models – it’s a more visible, accessible world for everyone.